Article by Davis Jacobson

“Doubt is our product, since it is the best means of competing with the ‘body of fact’ that exists in the minds of the general public. It is also the means of establishing a controversy.”

–Brown and Williamson, 1969 (internal memorandum)

It is often lamented that there are misunderstandings of scientific findings in the general public. There’s no blame there; science is what scientists do, not what non-scientists do. I think people can be forgiven for lacking expertise on whatever subject happens to be under discussion at the moment.

More concerningly, some special interests have played upon these misunderstandings to their own advantage or that of their constituents. This is unfortunate, but it is to be expected in an unregulated marketplace of ideas.

Nevertheless, if a concerned citizen or policy-maker needs to act on science-related issues, it is quite important to bet on the best information available, and that information can often fairly be said to come from science.

Science itself can be a source of confusion — if only because scientists and non-scientists don’t always use language in the same ways (or because certain background assumptions differ between scientific and casual speech). Today I’d like to touch on a few words that are often sources of confusion in and of themselves.

“[P]ropositions to which no competent man today demurs.”

Uncertainty:

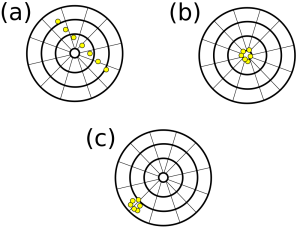

(a) Neither precise nor accurate; (b) precise and accurate; (c) precise but inaccurate. Procedural methods can correct for systematic biases like those in (a) and (c).

Image: Public Domain
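The distinction in the figure can be put into numbers. The sketch below is a minimal illustration that assumes a known reference value and a handful of made-up readings (hypothetical, not from any real instrument). The bias, meaning the gap between the average reading and the true value, reflects accuracy. The standard deviation of the readings reflects precision. Subtracting a measured bias is one simple example of the kind of procedural correction the caption mentions.

```python
import statistics

def summarize(readings, true_value):
    """Return (bias, spread) for a set of repeated readings.

    bias   -> how far the average lies from the true value (accuracy)
    spread -> sample standard deviation of the readings (precision)
    """
    bias = statistics.mean(readings) - true_value
    spread = statistics.stdev(readings)
    return bias, spread

TRUE_VALUE = 10.0  # hypothetical reference value, e.g. a calibrated standard

# Hypothetical readings resembling panel (c): tightly clustered but offset.
panel_c = [10.52, 10.48, 10.50, 10.51, 10.49]

bias, spread = summarize(panel_c, TRUE_VALUE)
print(f"bias = {bias:+.3f}, spread = {spread:.3f}")

# Once measured against the reference, a systematic bias can be subtracted.
# The random spread is untouched by this correction.
corrected = [r - bias for r in panel_c]
print(f"bias after correction = {abs(summarize(corrected, TRUE_VALUE)[0]):.3f}")
```

Note that the correction shifts every reading by the same amount, so it improves accuracy without changing precision.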

“Uncertainty” is a quantitative measure of how reliable a measurement is: it describes the range of values that could reasonably be attributed to the quantity being measured. Scientists evaluate it in well-defined ways. They can

- Evaluate the uncertainty empirically, for example by making numerous repeated measurements with a particular tool and analyzing how much the results scatter in practice (Type A evaluation of uncertainty), or

- Estimate the uncertainty from what is known of the measurement system or experimental method (or common sense): “Because our machine was built this way, it can’t be more accurate than that.” This is Type B evaluation of uncertainty.
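Both kinds of evaluation end in the same currency, a standard uncertainty. This sketch assumes a hypothetical scale that displays readings in 0.01 g steps. The Type A part comes from the scatter of repeated readings (their standard deviation divided by the square root of the number of readings). The Type B part treats the display rounding as a rectangular distribution of half-width 0.005 g, whose standard uncertainty is the half-width divided by the square root of 3. Combining the two in quadrature is the simplest common rule and assumes the components are uncorrelated.

```python
import math
import statistics

def type_a_uncertainty(readings):
    """Type A: standard uncertainty of the mean, from the observed scatter."""
    return statistics.stdev(readings) / math.sqrt(len(readings))

def type_b_uncertainty_rectangular(half_width):
    """Type B: standard uncertainty when the value is only known to lie
    within +/- half_width, every value in that range being equally likely
    (a rectangular distribution)."""
    return half_width / math.sqrt(3)

# Hypothetical repeated weighings on a scale that displays 0.01 g steps.
readings = [12.31, 12.34, 12.29, 12.33, 12.32, 12.30]

u_a = type_a_uncertainty(readings)
u_b = type_b_uncertainty_rectangular(0.005)  # display rounds to +/- 0.005 g

# Assumes the two components are uncorrelated, so they add in quadrature.
u_combined = math.sqrt(u_a**2 + u_b**2)

print(f"mean = {statistics.mean(readings):.3f} g, u_A = {u_a:.4f} g, "
      f"u_B = {u_b:.4f} g, combined = {u_combined:.4f} g")
```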

Uncertainties are known, quantifiable sources of measurement variation that can be sensibly accounted for (large PDF) in scientific procedure and reporting. There are even scientific studies of uncertainty itself!

Uncertainty is a mathematical quantity describing the range within which a measured value plausibly lies. Uncertainty does not mean doubt.

Error:

In scientific usage, “error” is used in several distinct ways, not all of which mean “mistake.” Commonly mentioned errors include:

- Type I errors, in which a “null hypothesis” (usually, that there is no real effect) is rejected even though it is true. Because the test wrongly reports an effect that is not there, this is called a “false positive.”

- Type II errors, in which a false null hypothesis fails to be rejected, so that a real effect goes undetected (a “false negative”).

- Other statistical errors, such as “sampling error.” (Sampling error is the difference, arising purely by chance, between a value measured in a subgroup of a population and the true value for the whole population.)

- Systematic errors, such as the change in length of a measuring rod with temperature (which may be addressed by Type A or Type B evaluation of uncertainty).

- Random errors, such as the scatter among repeated readings of the same quantity (likewise addressed by Type A or Type B evaluation of uncertainty).
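Type I and Type II error rates can be estimated by simulation. This is a minimal sketch that assumes a two-sided z-test of the null hypothesis “the true mean is 0,” normally distributed measurements with a known standard deviation of 1, and an arbitrary (hypothetical) real effect of 0.5 for the Type II case. With the test set at the 5% significance level, roughly 5% of simulated experiments in which the null hypothesis is true reject it anyway.

```python
import math
import random
from statistics import NormalDist, mean

# Critical value for a two-sided test at the 5% significance level (about 1.96).
Z_CRIT = NormalDist().inv_cdf(0.975)

def rejects_null(sample, sigma=1.0):
    """Two-sided z-test of the null hypothesis 'the true mean is 0',
    assuming the measurement standard deviation sigma is known."""
    z = mean(sample) / (sigma / math.sqrt(len(sample)))
    return abs(z) > Z_CRIT

def rejection_rate(true_mean, n=20, trials=5000, seed=1):
    """Fraction of simulated experiments (n readings each) that reject the null."""
    rng = random.Random(seed)
    rejections = sum(
        rejects_null([rng.gauss(true_mean, 1.0) for _ in range(n)])
        for _ in range(trials)
    )
    return rejections / trials

# Null hypothesis true (no effect): every rejection is a Type I error.
type_i = rejection_rate(true_mean=0.0)
# Null hypothesis false (hypothetical real effect of 0.5): every failure
# to reject is a Type II error.
type_ii = 1 - rejection_rate(true_mean=0.5)

print(f"Type I rate ~ {type_i:.3f}, Type II rate ~ {type_ii:.3f}")
```

The Type I rate lands near the chosen 5% by construction. The Type II rate depends on the size of the real effect and the number of readings, which is why it is not fixed by the significance level alone.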

Note that each of these usages can be defined mathematically to mean specific things in statistical studies and can be quantified and accounted for in scientific practice and reporting.
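To illustrate what “quantified and accounted for” can look like, here is a minimal sketch with hypothetical numbers. The sampling error of a proportion estimated from a simple random sample is commonly summarized by its standard error, sqrt(p(1 - p)/n). A systematic error such as the thermal expansion of a measuring rod can be corrected with the linear expansion formula. The expansion coefficient of about 12 × 10⁻⁶ per °C is an approximate value for steel, and the 20 °C calibration temperature is an assumption.

```python
import math

def standard_error_of_proportion(p_hat, n):
    """Typical size of the sampling error in a proportion p_hat estimated
    from a simple random sample of size n."""
    return math.sqrt(p_hat * (1 - p_hat) / n)

def correct_thermal_expansion(reading, alpha, temp, ref_temp=20.0):
    """Correct a length read from a rod calibrated at ref_temp (assumed 20 C)
    but used at temp. A warm rod has expanded, so its markings under-read by
    the factor (1 + alpha * (temp - ref_temp)); multiplying undoes the bias."""
    return reading * (1 + alpha * (temp - ref_temp))

# Hypothetical survey: 540 of 1000 randomly sampled people answer "yes".
se = standard_error_of_proportion(0.54, 1000)
print(f"estimate 0.540 with standard error {se:.4f}")

# Hypothetical steel rod (alpha roughly 12e-6 per degree C) read at 30 C.
length = correct_thermal_expansion(2.0000, 12e-6, 30.0)
print(f"corrected length: {length:.5f} m")
```

The sampling error is reported alongside the estimate, while the systematic error is removed from the measurement itself. These are the two broad ways errors enter scientific reporting.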

In casual speech, people usually aren’t talking about the results of solving mathematical equations when they say things like, “I made an error,” but in scientific speech, it cannot be assumed that scientists have made a mistake when they report an error.

Confidence:

When all of the known uncertainties and errors in a scientific experiment are accounted for, we can state how confident we are in the result with another mathematical entity called a “confidence interval”: a range of values computed from the data, reported together with a confidence level expressed as a percentage. A 95% confidence interval comes from a procedure that, if the experiment were repeated many times, would produce intervals containing the true value about 95% of the time. Here is a short video describing how to calculate a confidence interval.
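The meaning of “95% confidence” concerns the procedure, and a simulation makes that concrete. This sketch assumes normally distributed measurements with a known standard deviation. If the standard deviation were estimated from the data, a t-distribution multiplier would replace the normal one. The true mean, spread, and sample size are hypothetical. When the experiment is repeated thousands of times, about 95% of the computed intervals contain the true mean.

```python
import math
import random
from statistics import NormalDist, mean

def confidence_interval(sample, sigma, level=0.95):
    """Interval for the true mean, assuming normally distributed measurements
    whose standard deviation sigma is known in advance."""
    z = NormalDist().inv_cdf(0.5 + level / 2)  # about 1.96 when level is 0.95
    half_width = z * sigma / math.sqrt(len(sample))
    center = mean(sample)
    return center - half_width, center + half_width

# Hypothetical experiment: 25 readings of a quantity whose true value is 5.0.
TRUE_MEAN, SIGMA, N, TRIALS = 5.0, 2.0, 25, 4000
rng = random.Random(42)

hits = 0
for _ in range(TRIALS):
    sample = [rng.gauss(TRUE_MEAN, SIGMA) for _ in range(N)]
    low, high = confidence_interval(sample, SIGMA)
    hits += low <= TRUE_MEAN <= high  # a True comparison counts as 1

coverage = hits / TRIALS
print(f"fraction of intervals containing the true mean: {coverage:.3f}")
```

In a real study only one of these intervals is ever computed. The 95% describes how often the method succeeds over many repetitions.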

Establishing high degrees of confidence through careful procedure and interpretation reduces the incidence of Type I and Type II errors. (The p-value, a related statistical measure that is widely reported alongside or instead of confidence intervals, is beyond the scope of this article.)
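Collecting enough data is one concrete form of careful procedure. The sketch below computes, rather than simulates, the Type II error rate of a two-sided z-test. It assumes a known standard deviation of 1, and the effect size of 0.5 is hypothetical. It also shows a trade-off worth keeping in mind. At a fixed sample size, demanding a higher confidence level (a smaller significance level α) lowers the Type I rate but raises the Type II rate. Increasing the sample size lowers the Type II rate at any given confidence level.

```python
import math
from statistics import NormalDist

STD_NORMAL = NormalDist()

def type_ii_rate(effect, sigma, n, alpha=0.05):
    """Probability that a two-sided z-test at significance level alpha misses
    a real shift of size `effect` in the mean (known sigma, n measurements)."""
    z_crit = STD_NORMAL.inv_cdf(1 - alpha / 2)
    shift = effect * math.sqrt(n) / sigma
    # Under the real effect the z statistic is centred on `shift`; the test
    # fails to reject whenever it falls inside (-z_crit, z_crit).
    return STD_NORMAL.cdf(z_crit - shift) - STD_NORMAL.cdf(-z_crit - shift)

for alpha in (0.05, 0.01):  # 95% and 99% confidence levels
    for n in (10, 40, 160):
        rate = type_ii_rate(0.5, 1.0, n, alpha)
        print(f"alpha={alpha}, n={n:>3}: Type II rate = {rate:.3f}")
```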

Scientific studies are usually not published unless their findings are statistically significant at the 95% confidence level. In any event, there are strong conventions for reporting the confidence interval of a result, whatever confidence level is chosen. Here is a paper that explains why confidence intervals should be reported in medical papers; it assumes reporting at the 95% level.

The careful ways in which scientists account for error and uncertainty, and the honesty they demonstrate in disclosing their uncertainties and confidence, persuade me that their reports are trustworthy. In and of itself, this distinguishes science from other ways of knowing: knowability itself is a knowable quantity.

Re.: The comment that, “I think people can be forgiven for lacking expertise on whatever subject happens to be under discussion at the moment.” AGREED!

However, IMO, it is of considerable importance for people to try to grasp the most elementary basics of scientific methodology, namely the need for observability.

Would that be too much for even the least erudite of those amongst whom the rest of us dwell?